5. AI now a board-level imperative for public companies and investors

Data law trends 2026

In brief

AI has moved from a technical consideration to a board-level imperative for public companies worldwide. The opportunities and risks it presents carry profound implications for strategy, operations and investor relations and demand active oversight. As 2026 approaches, boards must not only manage AI but also be ready to articulate their approach clearly and convincingly to the market.

AI is reshaping business operations


AI is not just a tech issue; it’s a core tool and a risk that demands board attention across industries.

Alejandra Lynberg, Senior Associate

This wave of adoption spans not only generative AI – such as the large language models popularized by platforms like ChatGPT – but also more established applications, including automated and algorithmic decision-making. Successful adoption can act as a catalyst for efficiency and innovation and open new paths to growth. But when competitors or new entrants move faster, AI can just as easily upend incumbents.

These twin dynamics have made AI a core concern for public company boards. Their role is to steer organizations through this technological transformation and to ensure risks and opportunities are disclosed in ways that stand up to scrutiny from investors and regulators alike.

From risk disclosure to growth story

The impact of AI is so significant that it is changing how companies explain their use of the technology to investors, including in annual reports. Across the UK, US, and EU, public companies have materially increased AI-related disclosures.

In the UK, the number of statements on AI in annual reports rose by 12% last year. In the US, the proportion of S&P 500 companies disclosing board oversight of AI or board-level AI competency jumped more than 84% over the past year.

Much of this reporting has focused on risk. In the UK, the Corporate Governance Code requires boards to assess and manage business risks. Similarly, in the US, Securities and Exchange Commission (SEC) regulations compel disclosure of material risks. AI is increasingly treated as one of those material risks. In the EU, direct AI risk disclosure is still emerging, but the Corporate Sustainability Reporting Directive is already pushing companies to report on technology-related risks and opportunities – including AI’s potential to cut energy use or, conversely, to drive up energy demand. Individual Member States add further requirements: in Germany, for example, these include the German Corporate Governance Code, the Stock Corporation Act, the German Commercial Code and the German Accounting Standard.

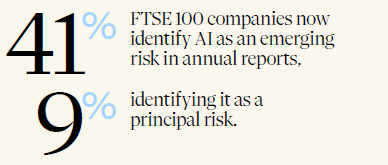

The trend is visible in the numbers:

(Freshfields data, August 2025)

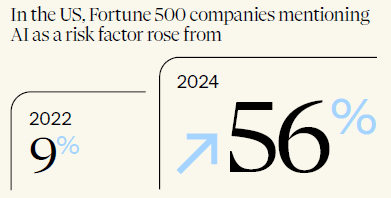

('The Rise of Generative AI in SEC Filings,' 2024)

Investor communication is expanding beyond AI-related risks. In their annual reports, companies are also outlining how AI is being integrated into operations, how it is affecting costs and resourcing, and whether they are making strategic investments in the technology.


AI governance is developing swiftly. A company’s success will increasingly depend on combining structured oversight with AI competency, strong governance frameworks, and a clear focus on delivering value while managing risks.

Rachael Annear, Partner

Investor activism takes aim at AI

Proxy advisors are pushing for enhanced disclosures on how companies are implementing AI, the risks it creates and the role of boards in overseeing it – pressure now visible across jurisdictions. For example, in the US, shareholder activists are demanding more transparency. The trade union federation AFL-CIO has submitted proposals at Apple, Netflix, Comcast, Warner Bros and Walt Disney, seeking detail on how AI is being used. At Apple’s 2024 AGM, 37.5% of investors backed the AFL-CIO’s call for AI ethics disclosures – a level of support that signals growing momentum.

AI-washing: the next enforcement flashpoint

As companies set out their AI strategies, they must navigate the risk of ‘AI washing’ – exaggerating AI capabilities to gain a competitive advantage or appeal to investors. This practice is now firmly on the enforcement radar globally. Companies making statements about AI – whether in marketing or mandatory disclosures – must ensure their accuracy.

In the US, the SEC has already acted. In 2024, two investment advisers settled the first AI-washing enforcement cases over false or misleading claims, paying civil penalties of US$225,000 and US$175,000 respectively, alongside censures and cease-and-desist orders. In 2025, the SEC’s Cyber and Emerging Technologies Unit declared AI-washing a core enforcement priority, targeting misleading representations by both public companies and startups – particularly the practice of rebranding rule-based automation as ‘AI’ or overstating the capabilities of predictive analytics and chatbots.


We will see increased scrutiny from regulators in the field of AI. For example, the US SEC is closely monitoring AI use in companies, financial services, trading and corporate disclosures. It has also refocused and renamed the Crypto Unit of its Division of Enforcement as the Cyber and Emerging Technologies Unit, which now prioritizes AI-related investigations and actions.

Beth George, Partner

Europe and the UK are moving in the same direction. While AI-washing is not yet addressed in a single statute, a combination of the EU AI Act, consumer protection laws and national advertising standards provides a strong enforcement toolkit. Companies making unsubstantiated AI claims risk fines, reputational damage and even civil or criminal liability, depending on the jurisdiction. Under the EU AI Act, providers of high-risk AI systems must meet stringent transparency requirements, conduct conformity assessments and notify deployers. Supplying incorrect, incomplete or misleading information to notified bodies or national competent authorities can trigger fines of up to €7.5m or 1% of total worldwide annual turnover, whichever is higher.
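Because of the "whichever is higher" rule, the maximum fine can never fall below €7.5m, while for groups with worldwide turnover above €750m the turnover-based limit takes over. The sketch below illustrates only that statutory ceiling, assuming "turnover" means total worldwide annual turnover for the preceding financial year. The function name is illustrative, and the sketch deliberately ignores the separate treatment of SMEs and start-ups and the factors authorities weigh when setting an actual fine.

```python
# Illustrative only: computes the statutory ceiling described above,
# not the fine an authority would actually impose.
FIXED_CAP_EUR = 7_500_000  # fixed amount cited in the text


def max_fine_ceiling_eur(worldwide_turnover_eur: float) -> float:
    """Return the higher of the fixed cap and 1% of worldwide turnover.

    Assumes a non-SME undertaking; SME/start-up rules and the factors
    used to set the actual amount are not modelled (an assumption).
    """
    if worldwide_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_cap = worldwide_turnover_eur / 100  # 1% of turnover
    return max(float(FIXED_CAP_EUR), turnover_cap)


if __name__ == "__main__":
    # Compare a smaller group, the break-even point and a large group.
    for turnover in (200_000_000, 750_000_000, 5_000_000_000):
        ceiling = max_fine_ceiling_eur(turnover)
        print(f"turnover EUR {turnover:,}: ceiling EUR {ceiling:,.0f}")
```

For a group with €5bn turnover, the ceiling is €50m; for any group with turnover of €750m or less, the fixed €7.5m figure applies.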

AI oversight moves into the boardroom spotlight

Investor scrutiny is increasingly focused on how boards oversee AI integration and deployment. This expectation is being formalized in regulatory and advisory frameworks across all three regions.

In the UK, the Financial Reporting Council’s guidance highlights controls over new technologies – including AI – as potentially ‘material’ for the purposes of a board’s declaration on the effectiveness of a company’s material controls. In the US, influential proxy advisors such as Glass Lewis now explicitly expect disclosures on board-level AI governance. In the EU, authorities including the European Securities and Markets Authority are issuing similar recommendations on AI oversight by corporate management.

Despite this pressure, governance structures remain in flux. Among FTSE 100 companies, only 7% of boards retain full oversight of AI, 19% delegate responsibility to the audit or risk committees, and 16% have dedicated AI committees. The remaining 58% either assign AI to general committees or leave governance undefined. The picture is similar in the US: in 2024, 89% of S&P 500 companies had not expressly disclosed whether AI oversight was assigned to the full board or to a committee.


As is often the case in the field of AI, the process of board disclosures and AI oversight requires iterative risk assessment and management. Regulators and investors worldwide expect ongoing reviews that produce updated policies and procedures, training and disclosures.

Giles Pratt, Partner

In the EU, there are not yet reliable figures on how AI oversight is distributed within companies. The AI Act itself does not mandate a particular governance model, leaving companies free to appoint internal or external officers – such as AI, IT security or compliance officers. Ultimately, members of the management and supervisory boards must have the expertise to critically assess and guide strategic decisions on AI. They are under a duty to examine the potential applications of AI models and, where appropriate, adjust corporate strategy in response to new developments. The high degree of regulatory scrutiny around AI means that boards must address not only traditional product- and sector-specific requirements, but also obligations under data protection law and the fast-emerging body of AI regulation.

Three pillars of effective AI governance

Boards must lean into scrutinizing how their businesses use AI to mitigate risks, capture value, and give investors confidence in relation to corresponding processes.

Zofia Aszendorf, Senior Associate

To manage risk and capture opportunity, forward-thinking boards are adopting comprehensive AI governance strategies built around three priorities:

- Navigating regulation: The global AI regulatory landscape is fragmented. Boards must contend with the EU's risk-based AI Act, a growing patchwork of US state laws and the divergent approaches in jurisdictions such as the UK, South Korea and China. This complexity requires boards to work closely with their compliance and legal teams to ensure oversight keeps pace with evolving risks.

- Building strong governance: Effective oversight starts with a clear internal framework that applies across markets. Boards need timely, relevant information – spanning product development, AI deployment, governance and compliance – to challenge management and hold AI operations to account.

- Scrutinizing high-risk use cases: Boards should give particular attention to the AI applications most likely to create legal, operational or reputational risks.


Companies are recognizing the growing need for structured AI oversight at the board level – and rightly so. It has become a strategic necessity, not just an add-on.

Theresa Ehlen, Partner

Looking ahead

As AI becomes more deeply embedded in business operations, boards will face intensifying pressure – both to manage the risks it creates and to communicate a compelling and credible narrative to the market.

For companies with disclosure obligations, the task is to find clear and defensible ways to meet rising expectations from investors and regulators. Public companies should consider:

- substantiating all public AI claims, with proper documentation of how AI is actually deployed in the business and its services;

- strengthening disclosure and marketing review procedures, for example by creating cross-functional panels bringing together legal, product and marketing expertise;

- enhancing internal governance and compliance processes, including adopting and adhering to an AI governance framework; and

- planning for transparency and auditability, to comply with requirements under the EU AI Act and consumer protection laws.
